8: Demand Paging

Demand Paging

Modern programs require a lot of physical memory, but they don’t use all their memory all of the time.

90-10 rule: programs spend 90% of their time in 10% of their code.

Use main memory as cache for disk

Demand Paging as Cache

- What is block size

- 1 page

- What is organization of this cache (i.e. direct-mapped, set-associative, fully-associative)?

- fully associative: arbitrary virtual \(\to\) physical mapping

- How do we find a page in the cache when look for it?

- first check TLB, then page-table traversal

- What is page replacement policy? (i.e. LRU, Random…)

- What happens on a miss?

- go to lower level to fill miss (i.e. disk)

- What happens on a write? (write-through, write back)

- write-back – need dirty bit

Memory-Mapped Files

Def. Memory-mapped Files (内存映射文件) is a segment of virtual memory that has been assigned a direct byte-for-byte correlation with some portion of a file or file-like resource.

- a special case of demand paging

- a replacement for syscall

read()/write()

Pros

- transparency - the program can use pointers to access those data

- zero copy I/O - the OS just changes the page table entries without copying the data into memory;

read()/write()needs to copy the data twice (disk-kernel-user) - pipelining - the program can start executing as soon as the page table has been set

- inter-process communication - sharing becomes easy

- large files - which pages shall be in memory? OS handles it for you

Cons

- frequent page faults

Use m-map when

- random access: access data in a non-sequential manner

- large files: for very large files that may not fit into memory

- multiple processes: data sharing across processes

- memory-mapped I/O and automatic caching

Instead, use read()/write() when

- small files

- streaming data access

- portability: not every OS has m-map

Implementation of Memory-Mapped Files

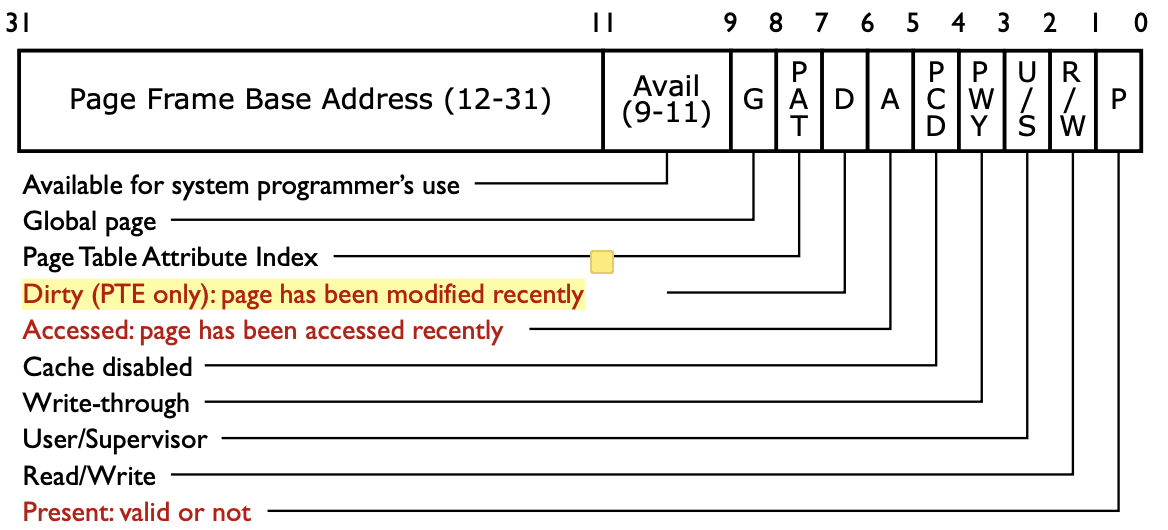

Figure 1: Page Table Entry

D(dirty): PTE only, page has been modified recentlyA(accessed): page has been accessed recently

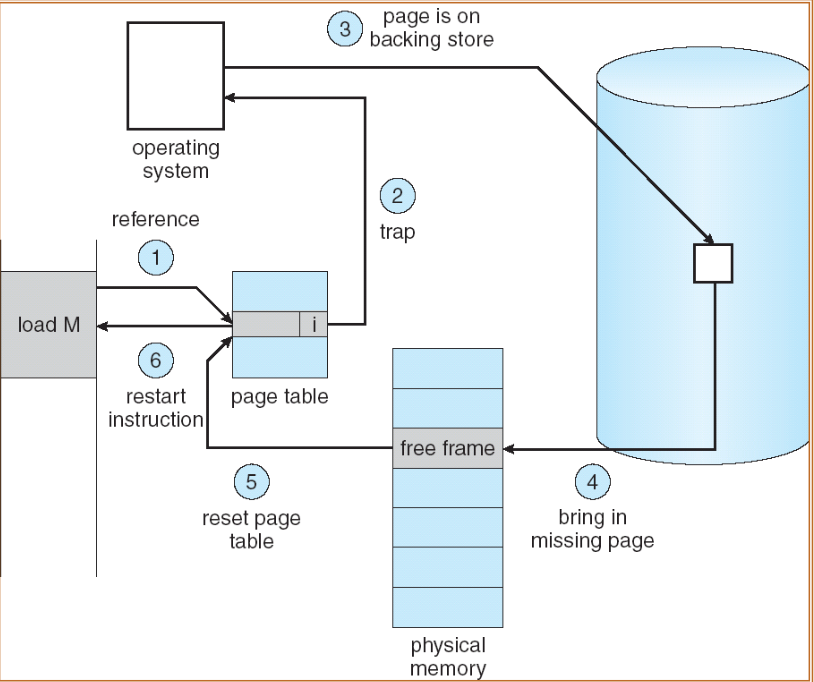

When program accesses an invalid address:

- (MMU) TLB miss; full page table lookup

- (MMU + OS) trap into page fault handler

- (OS) convert virtual address to file offset

- (OS) read data from disk to the memory (blocked)

- (CPU) disk interrupt when read completes

- (OS) updating page table by marking the entry as valid

- (MMU) TLB miss; full page table lookup

- (MMU) TLB update

Figure 2: When program accesses an invalid address

The Dirty Bit

The hardware tracks it with a dirty bit in page table entry

- initialized to 0

- Set to 1 whenever there is a store instruction for the page

The TLB also has a dirty bit

Allocating New Page Frame

If there is no empty page

- select a page to evict

- find page table entries that point to the evicted page

- core map - an array that maps physical page frames back to the table entries

- set page table entry to invalid

- TLB shoot down

- copy back any changes to the evicted page

- write back

- the same for application exit

- dirty bit

Page Eviction Policies

- FIFO: throws out oldest page. Let every page live in memory for same amount of time.

- MIN: replaces page that won’t be used for the longest time

- RANDOM: picks random page for every replacement

- typic solution for TLB

- LRU: replaces page that hasn’t been used for the longest time

- programs have locality, so if something not used for a while, unlikely to be used in the near future

- how to implement LRU? Use a list

- on each use, remove page from list and place at head

- LRU page is at tail

- problems with this scheme for paging

- need to know immediately when each page is used, so we can change its position in list

- many instructions for each hardware access

- 页面访问及其频繁,开销过大

Why we can implement LRU for TLB entry replacement, but not demand paging replacement?

- TLB is purely handled in hardware (MMU)

- TLB has fewer entries (typically 16-512)

Clocking Algorithm

- implementation with the

usebit- initialized to 0 in page table

- set to 1 whenever there is a page access

- when we need to evict a page, we look at the page under the hand

- if its

usebit is 1, clear it and move the hand, repeat - if its

usebit is 0, evict it

- if its

开销分析

- 驱逐开销变大

- 访问几乎没有开销

可以适当增加

usebit 的上限Which bits of PTE entry are used?

- Use: set when page is referenced; cleared by clock algorithm

- Modified: set when page is modified, cleared when page written to disk

- Valid: ok for program to reference this page

- Read-Only: ok for program to read page, but not modify

8: Demand Paging

https://ddccffq.github.io/2025/12/31/操作系统/Demand Paging/