Chapter 5: Thread

Concurrency

Def. Concurrency means multiple activities are in progress at the same time.

- a network service handling many client requests at the same time

- a user-interactive app running alongside background apps

Concurrency is about dealing with lots of things at once, and parallelism is about doing lots of things at once.

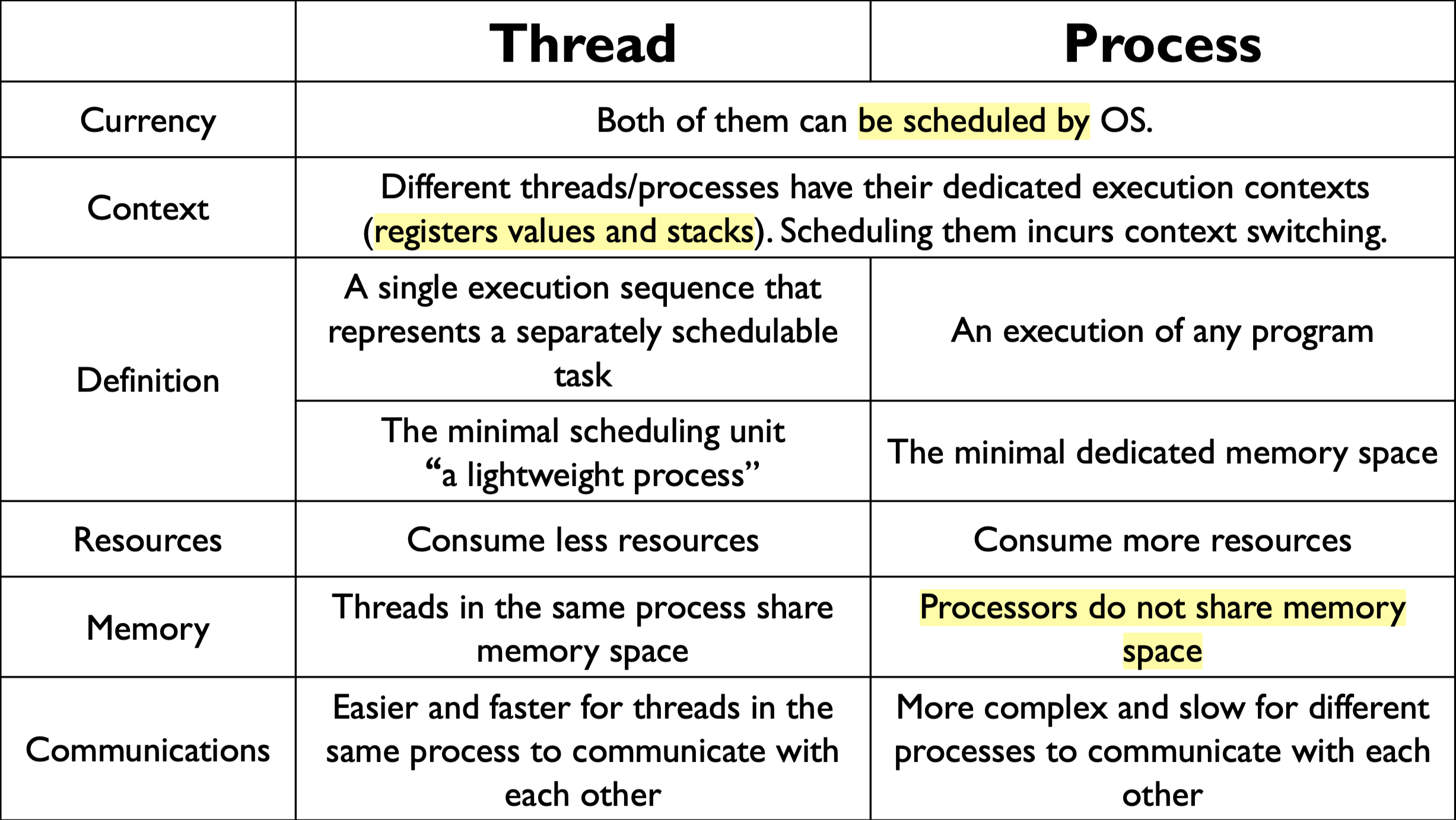

Thread

Def. A thread is a single execution sequence that represents a separately schedulable task. It is also known as the minimal scheduling unit in the OS.

Execution sequence: each thread executes a sequence of instructions (assignments, conditionals, loops, procedures, etc.) just as in the sequential programming model.

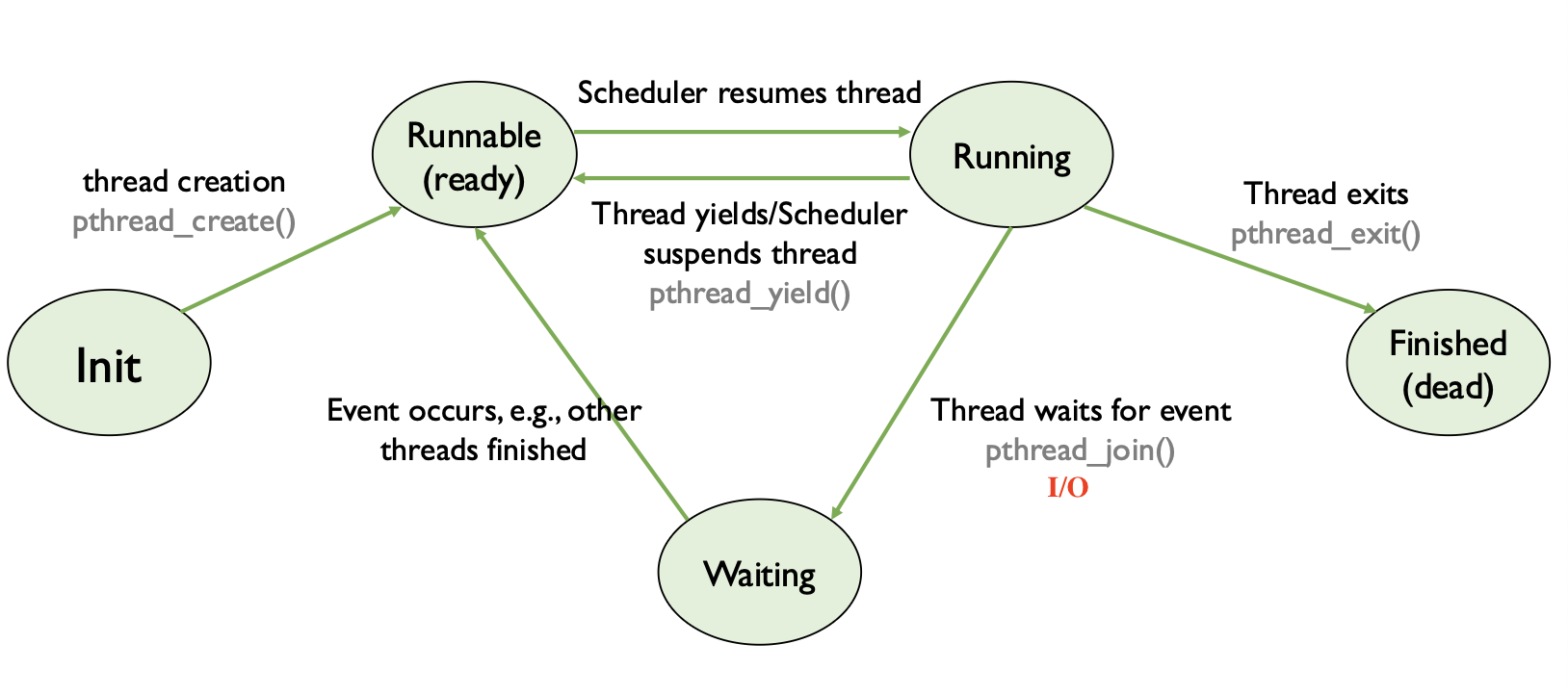

Separately schedulable task: the OS can run, suspend, or resume a thread at any time.

Some thread use cases

- program structure: expressing logically concurrent tasks, like clicking a button to display contents fetched from the web

- responsiveness: shifting work to run in the background, such as syncing data to the server, data compression and database operations

- performance: exploiting multiple processors (concurrency turns into parallelism)

  - extensively used in matrix operations and deep learning

- performance: managing I/O devices

  - processors are usually faster than I/O devices

  - while one thread waits for I/O, other threads keep the processor busy
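To make the I/O use case concrete, here is a minimal sketch in Python. It assumes `time.sleep` is an acceptable stand-in for a blocking device or network call; the names `fake_io` and `run_concurrently` are made up for illustration.

```python
import threading
import time

def fake_io(task_id, results):
    """Pretend to wait on a slow device, then record a result."""
    time.sleep(0.2)                    # stands in for a blocking I/O call
    results[task_id] = task_id * 2     # each thread writes its own slot

def run_concurrently(n_tasks):
    """Run n_tasks fake I/O operations, one thread each, and time them."""
    results = [None] * n_tasks
    threads = [threading.Thread(target=fake_io, args=(i, results))
               for i in range(n_tasks)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()                       # wait until every thread has finished
    elapsed = time.perf_counter() - start
    return results, elapsed

results, elapsed = run_concurrently(4)
print(results, round(elapsed, 2))
```

Run one after another, the four waits would take about 0.8 s. With one thread per wait they overlap, so the total should stay close to a single wait.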

Threads in the same process share memory space, but not execution context.

- separate: registers, stack

- shared: data, code, files (file descriptors, see Chapter 4)
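The shared/separate split can be sketched with Python's `threading` module. The names `counter` and `add` are illustrative only; the lock is there because several threads update the same shared object.

```python
import threading

counter = {"value": 0}           # shared: one object visible to every thread
lock = threading.Lock()

def add(n_times):
    local_sum = 0                # separate: each thread has its own local_sum
    for _ in range(n_times):
        local_sum += 1
    with lock:                   # serialize the update of the shared data
        counter["value"] += local_sum

threads = [threading.Thread(target=add, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter["value"])
```

Each thread accumulates into its own `local_sum` without interference, and all four contributions end up in the single shared `counter`.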

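Switching the processor from one thread to another requires a thread context switch.

Because of concurrency, thread execution speed is “unpredictable”: a program must not assume anything about how fast threads run relative to one another. Thread switching is transparent to the code.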
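A small sketch of what “unpredictable” means in practice: the order in which threads reach a shared list may differ from run to run, so only the set of entries can be relied on. The names `record` and `run` are hypothetical.

```python
import threading

def record(tid, order, lock):
    """Append this thread's id to the shared list."""
    with lock:
        order.append(tid)

def run(n_threads):
    order = []
    lock = threading.Lock()
    threads = [threading.Thread(target=record, args=(i, order, lock))
               for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return order

order = run(8)
print(order)          # the arrival order is not guaranteed
print(sorted(order))  # the set of ids is
```

Correct code depends on explicit synchronization (here `join` and the lock), not on which thread happens to run first.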

Thread implementation

Thread Data Structures

TCB

Def. TCB (Thread Control Block). A TCB contains:

- Stack pointer: each thread needs its own stack.

- Copy of processor registers

  - General-purpose registers for storing intermediate values

  - Special-purpose registers for storing the instruction pointer and stack pointer

- Metadata

  - Thread ID

  - Scheduling priority

  - Status
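The fields listed above can be pictured as a record. This is only an illustrative sketch: a real kernel defines its TCB as a C structure. The `Status` values, the register names, and the addresses below are assumptions, not taken from these notes.

```python
from dataclasses import dataclass, field
from enum import Enum

class Status(Enum):
    # Assumed set of states; the notes only say "Status".
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    FINISHED = "finished"

@dataclass
class TCB:
    # Metadata
    thread_id: int
    priority: int = 0
    status: Status = Status.READY
    # Saved execution context
    stack_pointer: int = 0                          # top of this thread's own stack
    registers: dict = field(default_factory=dict)   # saved copy of CPU registers

tcb = TCB(thread_id=1, priority=5, stack_pointer=0x7FFF0000)
tcb.registers["ip"] = 0x401000   # made-up instruction pointer value
print(tcb)
```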

How large is the stack?

- In the kernel, it’s usually small: 8 KB in Linux on Intel x86.

- In user space, it’s library-dependent (pthread.h).

  - Most libraries check whether a stack overflow has occurred.

  - A few languages/libraries, such as Google’s Go, automatically grow the stack when needed.
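As an example of a library-dependent stack size, Python's `threading.stack_size` queries or sets the stack size used for threads created afterwards. The 256 KiB value below is an arbitrary choice for illustration. Some platforms reject certain sizes or do not allow changing the size at all, in which case the call raises an error.

```python
import threading

REQUESTED = 256 * 1024          # arbitrary example size, in bytes

def depth(n):
    """Recurse n levels to use some stack space."""
    return 0 if n == 0 else 1 + depth(n - 1)

# Setting a new size returns the previous setting (0 means platform default).
previous = threading.stack_size(REQUESTED)

result = []
t = threading.Thread(target=lambda: result.append(depth(100)))
t.start()
t.join()

in_effect = threading.stack_size()   # the size used for new threads right now
threading.stack_size(previous)       # restore the earlier setting

print(in_effect, result)
```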

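The TCB (Thread Control Block) is the core data structure the operating system maintains for each thread. It records the thread’s status, registers, stack pointer and other key information. Every thread has its own TCB, which the OS uses to distinguish and manage threads.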

Shared state

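Threads can share some state (such as global variables and heap memory), but each thread also has private state of its own (such as its stack and registers). The TCB records this private state.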

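The OS does not enforce physical isolation of a thread’s private state (such as its stack). Each thread has its own stack, but all the stacks live in the virtual address space of the same process, so in principle thread A can reach thread B’s stack through a pointer.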

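If thread A has a pointer to the stack location of thread B, can A access/modify the variables on the stack of thread B? Yes. All threads share the virtual address space of their process, so once thread A holds the address of a variable on thread B’s stack, it can read and write it directly. The OS does not prevent this.

For two separate processes, the answer is no: each process has its own virtual address space, so the same virtual address maps to different physical addresses.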
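Python does not expose raw stack addresses, so the following is only an analogy. A list reachable solely through thread B's local variable stands in for "a variable on B's stack", and the hypothetical `published` dictionary stands in for "A obtained a pointer to it".

```python
import threading

published = {}                   # how the reference leaks out of thread B
ready = threading.Event()        # set once B has published its reference
done = threading.Event()         # set once A has finished modifying
seen_by_b = []

def thread_b():
    local_values = [1, 2, 3]     # referenced only by B's local variable
    published["ref"] = local_values
    ready.set()
    done.wait()
    seen_by_b.append(list(local_values))   # B looks at its "private" data again

def thread_a():
    ready.wait()
    published["ref"][0] = 99     # A writes through the leaked reference

b = threading.Thread(target=thread_b)
a = threading.Thread(target=thread_a)
b.start()
a.start()
a.join()
done.set()
b.join()

print(seen_by_b)
```

Nothing stops A's write: B sees its own local data changed, which is the behavior described above for threads within one process.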

Kernel Thread Context Switch

- Voluntary kernel thread context switch

  - Turn off interrupts.

  - Get the next ready thread.

  - Mark the old thread as ready.

  - Add the old thread to readyList.

  - Save all registers and the stack pointer of the old thread.

  - Set the stack pointer to the new thread’s stack.

  - Restore the new thread’s register values.

- Involuntary kernel thread context switch

  - Save the state.

  - Run the kernel’s handler.

  - Restore the state.

The involuntary case is almost identical to a user-mode transfer, except:

- There is no need to switch modes (or stack).

- The handler can resume any thread on the ready list rather than always resuming the thread/process that was just suspended.
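The voluntary switch steps can be imitated with a toy round-robin scheduler. This is a simulation under loud assumptions, not kernel code. Python generators play the role of threads, `yield` is the voluntary switch, and the interpreter saves and restores each generator's frame in place of the registers and stack pointer. There are no interrupts to turn off here. The names `worker` and `run` are hypothetical.

```python
from collections import deque

def worker(name, steps, log):
    """A simulated thread: do one unit of work, then yield voluntarily."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield                      # suspending saves this "thread's" context

def run(threads):
    ready_list = deque(threads)    # plays the role of readyList
    while ready_list:
        current = ready_list.popleft()   # get the next ready thread
        try:
            next(current)          # restore its context, run until it yields
        except StopIteration:
            continue               # thread finished: do not requeue it
        ready_list.append(current)       # old thread is ready again: requeue

log = []
run([worker("A", 2, log), worker("B", 2, log)])
print(log)   # ['A:0', 'B:0', 'A:1', 'B:1']
```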

Implementing Multi-threaded Processes

Multi-threaded processes can be implemented through:

- Kernel threads (each thread operation traps into the kernel).

- User-level libraries (no kernel support).

- Hybrid mode.
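As one example of the kernel-thread approach, CPython's `threading.Thread` is backed by an OS thread on mainstream platforms. `threading.get_native_id()` (Python 3.8+) reports the ID the kernel assigned to the calling thread. The helper `collect_native_ids` below is hypothetical. It uses a barrier to keep all threads alive at once, so the kernel cannot reuse an ID while the IDs are being collected.

```python
import threading

def collect_native_ids(n_threads):
    """Start n_threads threads and return the kernel-assigned ID of each."""
    ids = []
    lock = threading.Lock()
    barrier = threading.Barrier(n_threads)

    def work():
        with lock:
            ids.append(threading.get_native_id())
        barrier.wait()             # stay alive until every thread has reported

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return ids

ids = collect_native_ids(4)
print(ids, threading.get_native_id())
```

A purely user-level library would instead multiplex all of its threads onto one kernel thread, so the kernel would see a single ID.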